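This article shows how to obtain the camera data stream through the Camera1, Camera2, and CameraX APIs in turn, and how to integrate the ArcSoft face recognition algorithm with each of them.

ArcSoft's official demo uses the Camera1 API. I previously wrote a separate article on integrating the ArcSoft SDK through the Camera2 API ("First on the Web: Integrating a Face Recognition Algorithm with Android Camera2").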

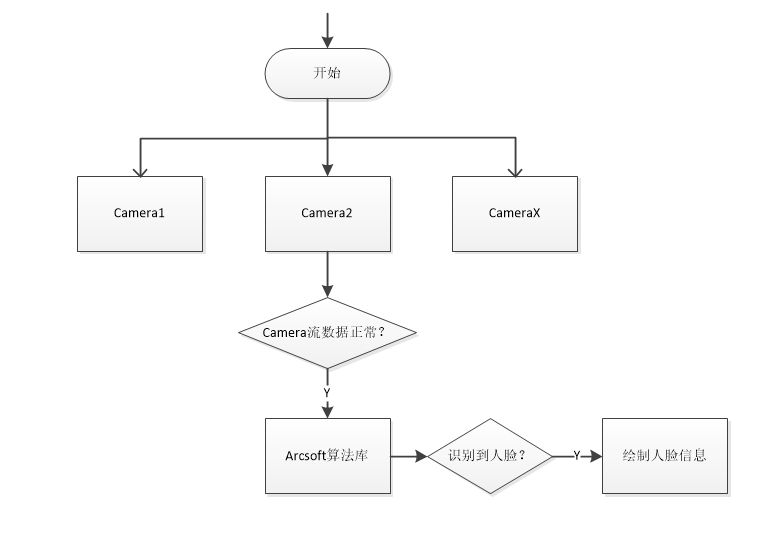

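01 Application design flowchart

As the figure below shows, the application flow is fairly simple: the camera data stream is obtained from each of the different APIs, sent to the ArcSoft face recognition library for recognition, and the recognition results are finally drawn on the screen.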
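The flow described here can be sketched as one shared sink that all three camera backends feed. This is only an illustration of the design, not the article's actual code: `Nv21FrameListener`, `FaceDetector`, `FaceOverlay`, and `FacePipeline` are hypothetical names, and the real ArcSoft engine calls are deliberately not modelled.

```java
import java.util.List;

/** Hypothetical sink that every camera backend (Camera1, Camera2, CameraX) pushes NV21 frames into. */
interface Nv21FrameListener {
    void onFrame(byte[] nv21, int width, int height);
}

/** Hypothetical stand-in for the recognition engine; the real ArcSoft API is not modelled here. */
interface FaceDetector {
    /** Returns one {left, top, right, bottom} rectangle per detected face. */
    List<int[]> detect(byte[] nv21, int width, int height);
}

/** Hypothetical stand-in for the overlay that draws the face rectangles on screen. */
interface FaceOverlay {
    void draw(List<int[]> faceRects);
}

/** Connects "frame in" to "rectangles drawn", independent of which camera API produced the frame. */
final class FacePipeline implements Nv21FrameListener {
    private final FaceDetector detector;
    private final FaceOverlay overlay;

    FacePipeline(FaceDetector detector, FaceOverlay overlay) {
        this.detector = detector;
        this.overlay = overlay;
    }

    @Override
    public void onFrame(byte[] nv21, int width, int height) {
        // An NV21 frame holds width*height luma bytes plus width*height/2 interleaved chroma bytes.
        if (nv21 == null || nv21.length < width * height * 3 / 2) {
            return; // drop malformed frames instead of passing them to the detector
        }
        overlay.draw(detector.detect(nv21, width, height));
    }
}
```

With this shape, each backend only has to convert its native frame format to NV21 and call `onFrame`; the detection and drawing steps stay identical across the three APIs.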

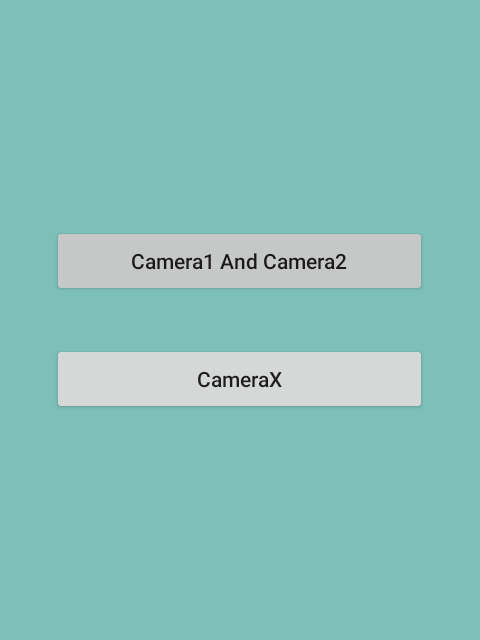

02 Application UI

CameraX has to be bound to the UI lifecycle, so the main screen has two Button entry points: one shared by Camera1 and Camera2, and a separate one for CameraX.

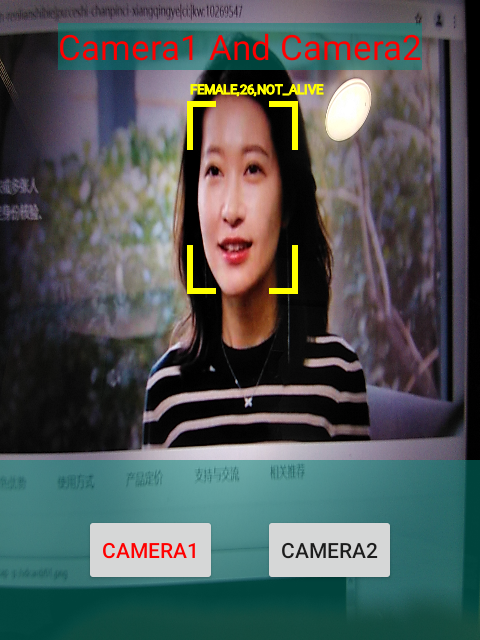

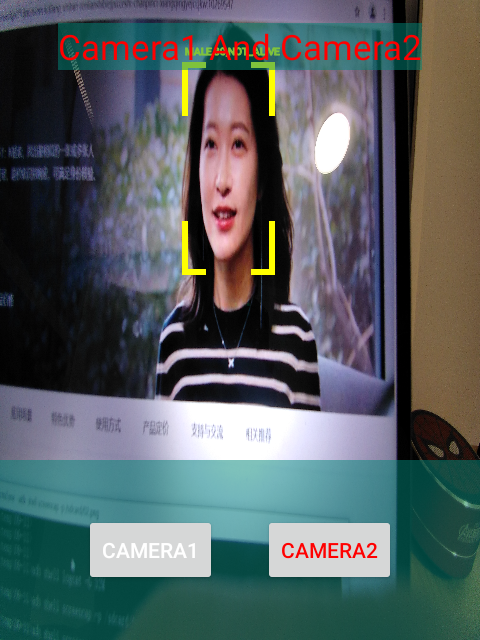

As the screenshots show, you can switch back and forth between Camera1 and Camera2.

CameraX has its own separate screen.

03 Code implementation

1) Using the Camera1 API:

    private void startCameraByApi1() {
        DisplayMetrics metrics = new DisplayMetrics();
        getWindowManager().getDefaultDisplay().getMetrics(metrics);
        CameraListener cameraListener = new CameraListener() {
            @Override
            public void onCameraOpened(Camera camera, int cameraId, int displayOrientation, boolean isMirror) {
                Camera.Size previewSize = camera.getParameters().getPreviewSize();
                mPreviewSize = new Size(previewSize.width, previewSize.height);
                drawHelper = new DrawHelper(previewSize.width, previewSize.height,
                        previewView.getWidth(), previewView.getHeight(),
                        displayOrientation, cameraId, isMirror, false, false);
            }

            @Override
            public void onPreview(byte[] nv21, Camera camera) {
                drawFaceInfo(nv21);
            }

            @Override
            public void onCameraClosed() {
            }

            @Override
            public void onCameraError(Exception e) {
            }

            @Override
            public void onCameraConfigurationChanged(int cameraID, int displayOrientation) {
                if (drawHelper != null) {
                    drawHelper.setCameraDisplayOrientation(displayOrientation);
                }
            }
        };
        cameraAPI1Helper = new Camera1ApiHelper.Builder()
                .previewViewSize(new Point(previewView.getMeasuredWidth(), previewView.getMeasuredHeight()))
                .rotation(getWindowManager().getDefaultDisplay().getRotation())
                .specificCameraId(Camera.CameraInfo.CAMERA_FACING_BACK)
                .isMirror(false)
                .previewOn(previewView)
                .cameraListener(cameraListener)
                .build();
        cameraAPI1Helper.init();
        cameraAPI1Helper.start();
    }

2) Using the Camera2 API:

    private void openCameraApi2(int width, int height) {
        Log.v(TAG, "---- openCameraAPi2();width: " + width + ";height: " + height);
        setUpCameraOutputs(width, height);
        CameraManager manager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);
        try {
            if (!mCameraOpenCloseLock.tryAcquire(2500, TimeUnit.MILLISECONDS)) {
                throw new RuntimeException("Time out waiting to lock back camera opening.");
            }
            manager.openCamera(mCameraId, mStateCallback, mBackgroundHandler);
        } catch (CameraAccessException e) {
            e.printStackTrace();
        } catch (InterruptedException e) {
            throw new RuntimeException("Interrupted while trying to lock camera opening.", e);
        }
    }

    private final CameraDevice.StateCallback mStateCallback = new CameraDevice.StateCallback() {
        @Override
        public void onOpened(@NonNull CameraDevice cameraDevice) {
            initArcsoftDrawHelper();
            mCameraOpenCloseLock.release();
            mCameraDevice = cameraDevice;
            try {
                Thread.sleep(500);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            createCameraPreviewSession();
        }

        @Override
        public void onDisconnected(@NonNull CameraDevice cameraDevice) {
            mCameraOpenCloseLock.release();
            cameraDevice.close();
            mCameraDevice = null;
        }

        @Override
        public void onError(@NonNull CameraDevice cameraDevice, int error) {
            mCameraOpenCloseLock.release();
            cameraDevice.close();
            mCameraDevice = null;
            finish();
        }
    };

    private void createCameraPreviewSession() {
        try {
            SurfaceTexture texture = previewView.getSurfaceTexture();
            assert texture != null;
            // We configure the size of default buffer to be the size of camera preview we want.
            texture.setDefaultBufferSize(mPreviewSize.getWidth(), mPreviewSize.getHeight());
            // This is the output Surface we need to start preview.
            Surface surface = new Surface(texture);
            mPreviewRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
            mPreviewRequestBuilder.addTarget(surface);
            mPreviewRequestBuilder.addTarget(mImageReader.getSurface());
            mCameraDevice.createCaptureSession(Arrays.asList(surface, mImageReader.getSurface()),
                    new CameraCaptureSession.StateCallback() {
                        @Override
                        public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {
                            Log.v(TAG, "--- Camera2API:onConfigured();");
                            if (null == mCameraDevice) {
                                return;
                            }
                            mPreviewCaptureSession = cameraCaptureSession;
                            try {
                                mPreviewRequestBuilder.set(CaptureRequest.CONTROL_AF_MODE,
                                        CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);
                                mPreviewRequest = mPreviewRequestBuilder.build();
                                mPreviewCaptureSession.setRepeatingRequest(mPreviewRequest,
                                        null, mBackgroundHandler);
                            } catch (CameraAccessException e) {
                                e.printStackTrace();
                            }
                        }

                        @Override
                        public void onConfigureFailed(@NonNull CameraCaptureSession cameraCaptureSession) {
                            showToast("Failed");
                        }
                    }, null);
        } catch (CameraAccessException e) {
            e.printStackTrace();
        }
    }

    private final ImageReader.OnImageAvailableListener mOnImageAvailableListener
            = new ImageReader.OnImageAvailableListener() {
        @Override
        public void onImageAvailable(ImageReader reader) {
            Image image = reader.acquireLatestImage();
            if (image == null) {
                return;
            }
            try {
                synchronized (mImageReaderLock) {
                    if (!mImageReaderLock.equals(1)) {
                        Log.v(TAG, "--- image not available,just return!!!");
                        return;
                    }
                    if (ImageFormat.YUV_420_888 != image.getFormat()) {
                        return;
                    }
                    Image.Plane[] planes = image.getPlanes();
                    lock.lock();
                    try {
                        if (y == null) {
                            y = new byte[planes[0].getBuffer().limit() - planes[0].getBuffer().position()];
                            u = new byte[planes[1].getBuffer().limit() - planes[1].getBuffer().position()];
                            v = new byte[planes[2].getBuffer().limit() - planes[2].getBuffer().position()];
                        }
                        if (planes[0].getBuffer().remaining() == y.length) {
                            planes[0].getBuffer().get(y);
                            planes[1].getBuffer().get(u);
                            planes[2].getBuffer().get(v);
                            int nv21Length = planes[0].getRowStride() * mPreviewSize.getHeight() * 3 / 2;
                            if (nv21 == null) {
                                nv21 = new byte[nv21Length];
                            }
                            if (nv21.length != nv21Length) {
                                return;
                            }
                            if (y.length / u.length == 2) {
                                // The returned data is YUV422
                                ImageUtil.yuv422ToYuv420sp(y, u, v, nv21, planes[0].getRowStride(), mPreviewSize.getHeight());
                            } else if (y.length / u.length == 4) {
                                // The returned data is YUV420
                                ImageUtil.yuv420ToYuv420sp(y, u, v, nv21, planes[0].getRowStride(), mPreviewSize.getHeight());
                            }
                            // Run the ArcSoft algorithm and draw the face info
                            drawFaceInfo(nv21);
                        }
                    } finally {
                        // Release the lock on every path, including the early return above
                        lock.unlock();
                    }
                }
            } finally {
                // Always hand the buffer back to the ImageReader
                image.close();
            }
        }
    };
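The listener above relies on `ImageUtil.yuv420ToYuv420sp`, whose implementation is not shown in the article. As a rough idea of what such a conversion involves, here is a minimal plain-Java sketch that packs separate Y, U, and V plane bytes into NV21 (full Y plane followed by interleaved V/U samples). The class name `Yuv420ToNv21` and its parameters are assumptions for illustration. Unlike the article's code, which uses the row stride as the frame width, this sketch drops any row padding, and it assumes even width and height.

```java
/** Illustrative converter (hypothetical, not the article's ImageUtil). */
final class Yuv420ToNv21 {
    /**
     * @param yRowStride    bytes between the starts of two consecutive Y rows
     * @param uvRowStride   bytes between the starts of two consecutive chroma rows
     * @param uvPixelStride bytes between two consecutive chroma samples in a row
     *                      (1 for planar data, 2 for semi-planar data)
     */
    static byte[] convert(byte[] y, byte[] u, byte[] v, int width, int height,
                          int yRowStride, int uvRowStride, int uvPixelStride) {
        byte[] nv21 = new byte[width * height * 3 / 2];
        int out = 0;

        // Copy the luma plane row by row, skipping any padding at the end of each row.
        for (int row = 0; row < height; row++) {
            System.arraycopy(y, row * yRowStride, nv21, out, width);
            out += width;
        }

        // Chroma is subsampled 2x2; NV21 stores V first, then U, for each sample.
        for (int row = 0; row < height / 2; row++) {
            for (int col = 0; col < width / 2; col++) {
                int idx = row * uvRowStride + col * uvPixelStride;
                nv21[out++] = v[idx];
                nv21[out++] = u[idx];
            }
        }
        return nv21;
    }
}
```

On Android the stride values would come from `Image.Plane.getRowStride()` and `Image.Plane.getPixelStride()`; if the downstream code expects the padded stride as the width, as the listener above does, the padding would have to be kept rather than dropped.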

3) Using the CameraX API (the code below uses the Config-based classes from the early CameraX alpha releases):

    private void startCameraX() {
        Log.v(TAG, "--- startCameraX();");
        mPreviewSize = new Size(640, 480);
        setPreviewViewAspectRatio();
        initArcsoftDrawHelper();
        Rational rational = new Rational(mPreviewSize.getHeight(), mPreviewSize.getWidth());

        // 1. preview
        PreviewConfig previewConfig = new PreviewConfig.Builder()
                .setTargetAspectRatio(rational)
                .setTargetResolution(mPreviewSize)
                .build();
        Preview preview = new Preview(previewConfig);
        preview.setOnPreviewOutputUpdateListener(new Preview.OnPreviewOutputUpdateListener() {
            @Override
            public void onUpdated(Preview.PreviewOutput output) {
                previewView.setSurfaceTexture(output.getSurfaceTexture());
                configureTransform(previewView.getWidth(), previewView.getHeight());
            }
        });

        // 2. capture
        ImageCaptureConfig imageCaptureConfig = new ImageCaptureConfig.Builder()
                .setTargetAspectRatio(rational)
                .setCaptureMode(ImageCapture.CaptureMode.MIN_LATENCY)
                .build();
        final ImageCapture imageCapture = new ImageCapture(imageCaptureConfig);

        // 3. analyze
        HandlerThread handlerThread = new HandlerThread("Analyze-thread");
        handlerThread.start();
        ImageAnalysisConfig imageAnalysisConfig = new ImageAnalysisConfig.Builder()
                .setCallbackHandler(new Handler(handlerThread.getLooper()))
                .setImageReaderMode(ImageAnalysis.ImageReaderMode.ACQUIRE_LATEST_IMAGE)
                .setTargetAspectRatio(rational)
                .setTargetResolution(mPreviewSize)
                .build();
        ImageAnalysis imageAnalysis = new ImageAnalysis(imageAnalysisConfig);
        imageAnalysis.setAnalyzer(new MyAnalyzer());

        CameraX.bindToLifecycle(this, preview, imageCapture, imageAnalysis);
    }

    private class MyAnalyzer implements ImageAnalysis.Analyzer {
        private byte[] y;
        private byte[] u;
        private byte[] v;
        private byte[] nv21;
        private ReentrantLock lock = new ReentrantLock();
        private Object mImageReaderLock = 1; // 1 available, 0 unAvailable

        @Override
        public void analyze(ImageProxy imageProxy, int rotationDegrees) {
            Image image = imageProxy.getImage();
            if (image == null) {
                return;
            }
            synchronized (mImageReaderLock) {
                if (!mImageReaderLock.equals(1)) {
                    image.close();
                    return;
                }
                if (ImageFormat.YUV_420_888 == image.getFormat()) {
                    Image.Plane[] planes = image.getPlanes();
                    if (mImageReaderSize == null) {
                        mImageReaderSize = new Size(planes[0].getRowStride(), image.getHeight());
                    }
                    lock.lock();
                    try {
                        if (y == null) {
                            y = new byte[planes[0].getBuffer().limit() - planes[0].getBuffer().position()];
                            u = new byte[planes[1].getBuffer().limit() - planes[1].getBuffer().position()];
                            v = new byte[planes[2].getBuffer().limit() - planes[2].getBuffer().position()];
                        }
                        if (planes[0].getBuffer().remaining() == y.length) {
                            planes[0].getBuffer().get(y);
                            planes[1].getBuffer().get(u);
                            planes[2].getBuffer().get(v);
                            int nv21Length = planes[0].getRowStride() * image.getHeight() * 3 / 2;
                            if (nv21 == null) {
                                nv21 = new byte[nv21Length];
                            }
                            if (nv21.length != nv21Length) {
                                return;
                            }
                            if (y.length / u.length == 2) {
                                // The returned data is YUV422
                                ImageUtil.yuv422ToYuv420sp(y, u, v, nv21, planes[0].getRowStride(), image.getHeight());
                            } else if (y.length / u.length == 4) {
                                // The returned data is YUV420
                                nv21 = ImageUtil.yuv420ToNv21(image);
                            }
                            // Run the ArcSoft algorithm and draw the face info
                            drawFaceInfo(nv21, mPreviewSize);
                        }
                    } finally {
                        // Release the lock on every path, including the early return above
                        lock.unlock();
                    }
                }
            }
        }
    }

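04 Problems encountered

1) Distorted preview

This happens when the aspect ratio of the camera preview size differs from the aspect ratio of the TextureView.

The usual approach is to iterate over the preview sizes the camera supports, pick the one that best fits the current device's screen size, and then resize the TextureView dynamically to match the chosen preview size.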
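The two steps just described can be sketched in plain Java as follows. This is one possible selection rule, not necessarily the one the app uses: `PreviewSizeChooser`, `choose`, and `fitView` are hypothetical names, sizes are passed as `{width, height}` arrays to keep the sketch free of Android classes, and ties between equally good aspect ratios are broken in favour of the larger resolution.

```java
import java.util.List;

/** Illustrative helper (hypothetical): pick a preview size, then size the view to match it. */
final class PreviewSizeChooser {
    private static final double EPS = 1e-9;

    /** Returns the supported size whose aspect ratio is closest to the target; ties go to the larger area. */
    static int[] choose(List<int[]> supported, int targetWidth, int targetHeight) {
        double targetRatio = (double) targetWidth / targetHeight;
        int[] best = null;
        double bestDiff = Double.MAX_VALUE;
        for (int[] s : supported) {
            double diff = Math.abs((double) s[0] / s[1] - targetRatio);
            boolean closer = diff < bestDiff - EPS;
            boolean tieButLarger = best != null
                    && Math.abs(diff - bestDiff) <= EPS
                    && (long) s[0] * s[1] > (long) best[0] * best[1];
            if (closer || tieButLarger) {
                best = s;
                bestDiff = diff;
            }
        }
        return best; // null when the list is empty
    }

    /** Returns the largest {width, height} that fits in the container and keeps the preview aspect ratio. */
    static int[] fitView(int containerWidth, int containerHeight, int previewWidth, int previewHeight) {
        // Cross-multiply to compare the two ratios without floating point.
        if ((long) containerWidth * previewHeight > (long) containerHeight * previewWidth) {
            // Container is relatively wider than the preview: height is the limiting dimension.
            return new int[]{(int) ((long) containerHeight * previewWidth / previewHeight), containerHeight};
        }
        // Otherwise width is the limiting dimension.
        return new int[]{containerWidth, (int) ((long) containerWidth * previewHeight / previewWidth)};
    }
}
```

Camera preview sizes are usually reported in landscape orientation, so for a portrait screen the caller would swap the preview width and height before calling `fitView`.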

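2) ArcSoft SDK errors

If you run into ArcSoft SDK errors along the way, enter the error code on the Help page of the ArcSoft developer center. The description it returns helps you locate and troubleshoot the problem quickly.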

Original link: https://www.dandroid.cn/archives/21260. Please credit the source when reposting.
